Following on from the crushing numbers from Credo Technology, Broadcom, a giant in networking, semiconductors and much else, has also blown past forecasts. In doing so it has fired a shot across the bows of Nvidia, whose shares dropped in response to Broadcom's strong numbers.

Broadcom, a company built on a string of successful acquisitions, is emerging as a key player in the AI space.

In our fiscal Q3 2025, total revenue was a record $16 billion, up 22% year-on-year. Now revenue growth was driven by better-than-expected strength in AI semiconductors and our continued growth in VMware. Q3 consolidated adjusted EBITDA was a record $10.7 billion, up 30% year-on-year. Now looking beyond what we are just reporting this quarter, with robust demand from AI, bookings were extremely strong. And our current consolidated backlog for the company hit a record of $110 billion.

Hock Tan, CEO, Broadcom, Q3 2025, 4 September 2025
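As a rough check on those headline figures, the short sketch below backs out the year-ago numbers and the adjusted EBITDA margin implied by the quoted growth rates. The inputs are the rounded figures from the call, so the outputs are approximations rather than reported results, and the helper name `prior_year` is my own.

```python
# Figures quoted on the call, in billions of US dollars (rounded).
revenue = 16.0
revenue_growth = 0.22
ebitda = 10.7
ebitda_growth = 0.30


def prior_year(value, growth):
    """Back out the year-ago figure implied by a year-on-year growth rate."""
    return value / (1 + growth)


prior_revenue = prior_year(revenue, revenue_growth)
prior_ebitda = prior_year(ebitda, ebitda_growth)

# Adjusted EBITDA as a share of revenue, now and a year earlier.
margin_now = ebitda / revenue
margin_then = prior_ebitda / prior_revenue

print(f"Implied year-ago revenue: ${prior_revenue:.1f}bn")
print(f"Implied year-ago EBITDA:  ${prior_ebitda:.1f}bn")
print(f"EBITDA margin now:  {margin_now:.1%}")
print(f"EBITDA margin then: {margin_then:.1%}")
```

Because EBITDA grew faster than revenue, the implied margin widens over the year, which is the point worth noticing in these numbers.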

Within these numbers, networking is a sweet spot.

Turning to AI and networking. Demand continued to be strong because networking is becoming critical as LLMs continue to evolve in intelligence and compute clusters have to grow bigger. The network is the computer, and our customers are facing challenges as they scale to clusters beyond 100,000 compute nodes. For instance, scale up, which we all know about, is a difficult challenge when you’re trying to create substantial bandwidth to share memory across multiple GPUs or XPUs within a rack. Today’s AI rack scales up a mere 72 GPUs at 28.8 terabit per second bandwidth using proprietary NVLink. On the other hand, earlier this year, we have launched Tomahawk 5 with open Ethernet, which can scale up 512 compute nodes for customers using XPUs.

Moving on to scaling out across racks. Today, the current architecture using 51.2 terabit per second requires 3 tiers of networking switches. In June, we launched Tomahawk 6 and our Ethernet-based 102 terabit per second switch, which flattens the network to 2 tiers, resulting in lower latency, much less power. And when you scale to clusters beyond a single data center footprint, you now need to scale computing across data centers. And over the past 2 years, we have deployed our Jericho3 Ethernet router with hyperscale customers to just do this. And today, we have launched our next-generation Jericho4 Ethernet fabric router with 51.2 terabit per second deep buffering intelligent congestion control to handle clusters beyond 200,000 compute nodes crossing multiple data centers.

We know the biggest challenge to deploying larger clusters of compute for generative AI will be in networking. And for the past 20 years, what Broadcom has developed for Ethernet networking is entirely applicable to the challenges of scale up, scale out and scale across in generative AI.

Hock Tan, CEO, Broadcom, Q3 2025, 4 September 2025
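To see why doubling switch bandwidth can remove a whole tier of switches, here is a minimal sketch using the textbook non-blocking fat-tree formula, in which switches with k ports can connect 2 × (k/2)^tiers endpoints. The 200 Gbps port speed is my assumption, not a figure from the call, and I use 102,400 Gbps for Tomahawk 6. Real deployments use oversubscription and mixed port speeds, so treat this as an illustration only.

```python
def ports_per_switch(switch_gbps, port_gbps):
    """Number of ports a switch offers at a given per-port speed."""
    return switch_gbps // port_gbps


def max_endpoints(radix, tiers):
    """Endpoints supported by a non-blocking fat tree of `tiers` levels.

    Every switch below the top tier uses half its ports facing down and
    half facing up, which gives 2 * (radix / 2) ** tiers endpoints.
    """
    return 2 * (radix // 2) ** tiers


def tiers_needed(radix, endpoints):
    """Smallest number of tiers that can connect `endpoints` nodes."""
    tiers = 1
    while max_endpoints(radix, tiers) < endpoints:
        tiers += 1
    return tiers


PORT_GBPS = 200          # assumed link speed per compute node
CLUSTER_NODES = 100_000  # cluster size mentioned on the call

for name, switch_gbps in [("51.2T switch", 51_200), ("102.4T switch", 102_400)]:
    radix = ports_per_switch(switch_gbps, PORT_GBPS)
    tiers = tiers_needed(radix, CLUSTER_NODES)
    print(f"{name}: {radix} ports, {tiers} tiers for {CLUSTER_NODES:,} nodes")
```

Under these assumptions, a 256-port switch tops out at 32,768 nodes in two tiers and so needs a third, while a 512-port switch reaches 131,072 nodes in two. The answer is sensitive to the port speed chosen.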

Here is one explanation of why Broadcom and Nvidia shares were moving in opposite directions on Friday.

Broadcom has been expanding its serviceable addressable market for its AI chips, designed to handle AI workloads, which could reach a staggering $90 billion by fiscal 2027. Broadcom expects its hyperscale customers to double accelerator cluster sizes from 500,000 to 1 million to improve performance and lower costs. An accelerator cluster is basically a group of servers equipped with custom AI chips. As data centers grow in size and sophistication, they will require more chips to handle increasingly complex AI workloads.

Arguably, the most exciting element of Broadcom’s investment thesis is the potential for hyperscalers to outfit data centers with more and more custom-made chips designed by Broadcom rather than graphics processing units (GPUs). GPUs, like those made by Nvidia, are like all-purpose workhorses that are really good at handling complex AI workloads. By comparison, ASICs, as the name “application-specific” entails, are meant to handle a specific function really well, often at lower cost.

ASICs won’t replace GPUs across all data center applications, but there is a possibility that Broadcom’s custom AI chips, along with associated networking gear, like Broadcom’s Tomahawk and Jericho switches, and compute, memory, and packaging capabilities, will become a preferred solution among hyperscalers. Management’s commentary on Broadcom’s AI chip business is worth following on the upcoming earnings call, especially within the context of Nvidia’s booming AI business.

The Motley Fool, 3 September 2025 (before Broadcom reported)

The stock market’s focus on a handful of great AI stocks, the Ten Titans, is becoming ever more marked. The Ten Titans have all been frequently recommended by Quentinvest. They are:

Nvidia, Microsoft, Apple, Amazon, Alphabet, Meta Platforms, Broadcom, Tesla, Oracle, and Netflix.

Broadcom’s AI business is on fire.

Last quarter when I said, hey, the trend of growth of ’26 will mirror that of ’25, which is 50%, 60% year-on-year, that’s really all I said. I didn’t quantify it. Of course, it comes at 50%, 60% because that’s what ’25 is. All I’m saying, if you want to put another way of looking at what I’m saying, which is perhaps more accurate, is we’re seeing the growth rate accelerate as opposed to just remain steady at that 50%, 60%. We are expecting and seeing 2026 to accelerate more than the growth rate we see in ’25. And I know you love me to throw in a number at you. But you know what? We are not supposed to be giving you a forecast for ’26. But the best way to describe it is, it will be a fairly material improvement.

Hock Tan, CEO, Broadcom, Q3 2025, 4 September 2025

Broadcom introduces us to what, for me, is a new acronym.

A big part of this driver of growth will be XPUs. It comes from the fact that we continue to gain share at our 3 original customers. Thereto, they’re on their journey and each passing generation, they go more to XPUs. So we are gaining share from these 3. We now have the benefit of an additional fourth significant customer — I should say fourth and very significant customer. And that combination will mean more XPUs. And as I said, as we create more and more XPUs among 4 guys, the networking — we get the networking with these 4 guys, but now the mix of networking from outside these 4 guys will now be smaller, be diluted, be a smaller share. So I expect actually networking percentage of the pool to be a declining percentage going into ’26.

Hock Tan, CEO, Broadcom, Q3 2025, 4 September 2025

So what are XPUs?

CPUs are still running their course and have served us well over the years. Moore’s law is the observation that the number of transistors on an integrated circuit doubles every two years. It’s hard to conceive that CPUs approaching 50 billion transistors can be at 100 billion by this time next year. The transistor is getting close to the size of an atom and, according to some scientists, we still have a way to go before the physical limits would prevent the reduction in size or higher operating speeds.

CPUs are incredibly versatile, relatively cheap and, for the most part, this general-purpose processing unit has done us well. I am sure you have heard the old adage: “if you only have a hammer, then everything looks like a nail.” The CPU is the hammer and, because of its versatility, it has been applied to just about every nail.

But why not use the right tool for the job? Sure, you can perform image enhancement on a CPU, but GPUs are specifically designed to manipulate graphics. Based on the task, the speed difference could be staggering.

Entering the age of XPUs

The “X” in “XPU” stands for any compute architecture that best fits the need of your application. xPUs are specialized processing units that are designed and optimized to handle specific workloads such as artificial intelligence, machine learning, and high-performance computing. XPUs are becoming increasingly popular due to their ability to accelerate specific workloads and offer improved performance over traditional CPUs.

GPUs are very popular now. You can’t help but see how the GPU is radically changing the landscape of Artificial Intelligence. But there are many other xPUs coming to life that can offer incredible processing speeds for specific tasks. Here are just a few:

ASICs (Application Specific Integrated Circuits), FPGAs (Field Programmable Gate Arrays), TPUs (Tensor Processing Units), NPUs (Neural Processing Units), IPUs (Intelligent Processing Units), DPUs (Data Processing Units), and I recently read a paper on a proposed BPU (Block Chain Processing Unit). This is obviously not an exhaustive list of alternative processing units, but you may see that we have now entered the age of XPUs.

By offloading task specific processing from the host computer’s CPU, we are able to free up the CPU to do other things. Data is growing at incredible rates and data processing needs are growing along with it. The future of compute will be one by which we optimize pipelines by running tasks on devices specifically designed for it.

Strengths of XPUs:

Some workloads are better served with traditional CPUs. However, XPUs offer significant performance gains over traditional CPUs for some specific tasks. XPUs can handle large amounts of data and compute-intensive tasks more efficiently than a traditional CPU, making them ideal for use in applications such as artificial intelligence and machine learning.

Limitations of XPUs:

One of the main limitations of XPUs is that they are not that straightforward to use. Writing functions for these devices is still somewhat complex and requires a deeper level of understanding of the hardware. Programming for xPUs can be challenging at times and often requires a paradigm shift in thinking to fully exploit them. However, the performance gains achieved are well worth the effort from both a performance and cost savings perspective.

Future of XPUs:

As the demand for high-performance computing and AI continues to grow, XPUs will play a critical role in meeting these demands. XPUs will be used in a wide range of applications from artificial intelligence to image processing. The continued development of XPUs will lead to more efficient and powerful accelerators, allowing for more complex AI models to be trained and deployed faster. XPUs deployed in real-world applications also have the added benefit of reducing energy consumption and carbon emissions.

In conclusion, XPUs offer significant benefits in terms of performance and energy efficiency for specific workloads. XPUs will continue to be an important component of high-performance computing and AI applications. It’s just a matter of time before they become mainstream.

Charlie Wardell, IT specialist, 2025

Growing demand for XPUs and for faster and more complex networking plays to the strengths of Broadcom. The market is huge, so I don’t see this as a major negative for Nvidia.

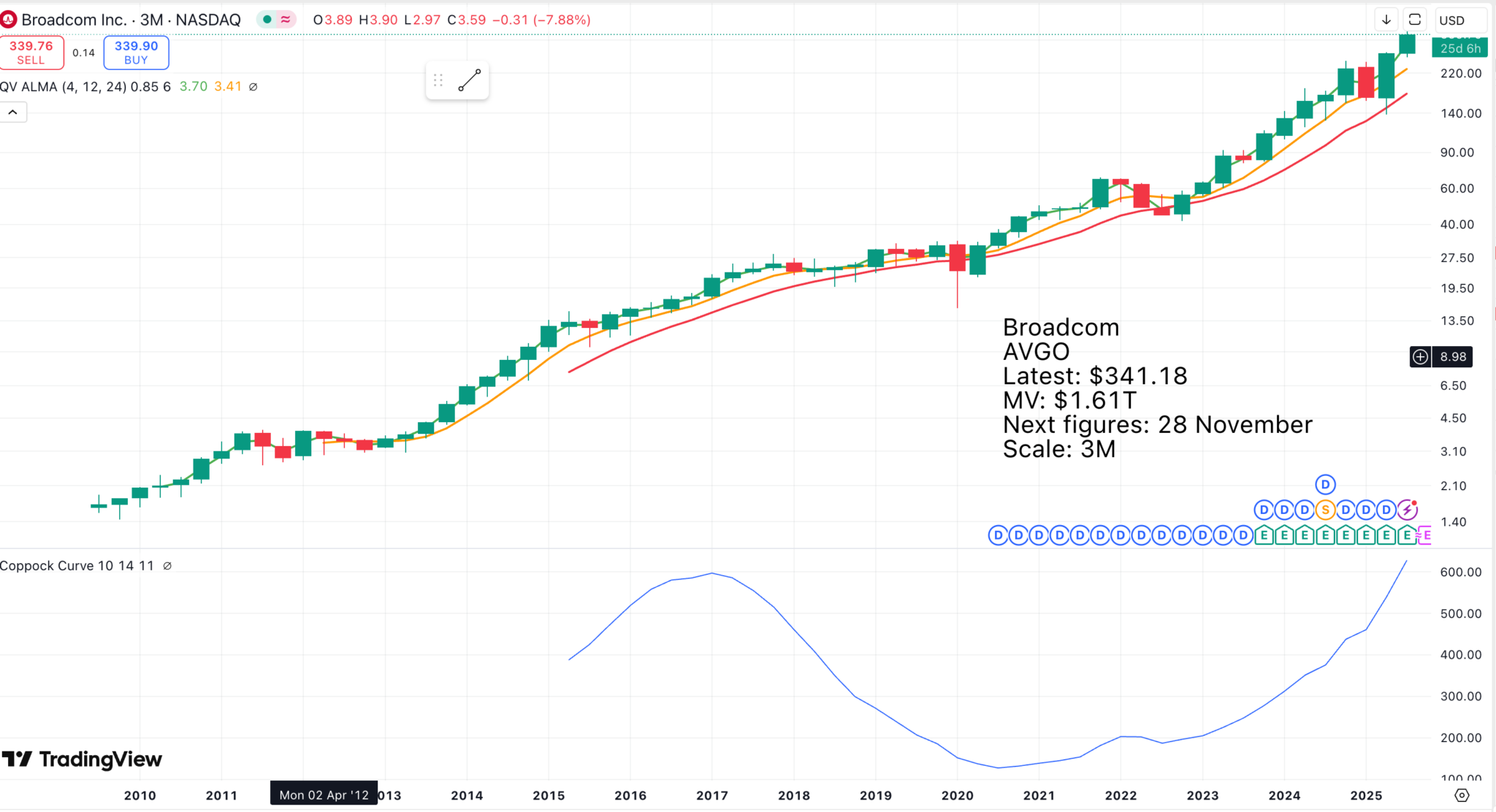

This is a great chart for a great stock. The clue is in the name.

Arista Networks is an industry leader in data-driven, client-to-cloud networking for large AI, data center, campus and routing environments. Its award-winning platforms deliver availability, agility, automation, analytics, and security through an advanced network operating stack.

Arista was founded by industry luminaries Andy Bechtolsheim, Ken Duda and David Cheriton, launched in 2008 and is led by CEO Jayshree Ullal. Its seasoned leadership team is globally recognized as respected leaders and visionaries with a rich and extensive history in networking and innovation.

The company went public in June 2014, is listed on the New York Stock Exchange (ANET), and currently has more than 10,000 customers worldwide.

Arista Networks website, 2025

Arista is a phenomenally exciting company.

Reviewing our midyear inflection point, our conviction with AI and Cloud Titans and enterprise customers has only strengthened. We began the year with a pragmatic guide of 17% growth or $8.2 billion annual revenue. But as the year has progressed, we recognize the potential to build a truly transformational networking company, addressing a massive total available market. This feels to us like a unique once-in-a-lifetime opportunity. We, therefore, raised our 2025 annual growth forecast to 25%, now targeting $8.75 billion in revenue, which is an incremental $550 million more due to our increased momentum that we are experiencing across AI, cloud and enterprise sectors.

Jayshree Ullal, CEO, Arista Networks, Q2 2025, 5 August 2025
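The guidance arithmetic is easy to verify. The sketch below backs out the 2024 revenue base implied by each guide and the dollar increase between them. All inputs are the rounded figures quoted above, so the implied base is an approximation, and the variable names are mine.

```python
# Guidance figures quoted on the call, in billions of US dollars (rounded).
initial_growth, initial_target = 0.17, 8.20
raised_growth, raised_target = 0.25, 8.75

# Each guide implies a 2024 revenue base: target / (1 + growth).
base_from_initial = initial_target / (1 + initial_growth)
base_from_raised = raised_target / (1 + raised_growth)

# Extra revenue implied by the raised guide.
increment = raised_target - initial_target

print(f"2024 base implied by initial guide: ${base_from_initial:.2f}bn")
print(f"2024 base implied by raised guide:  ${base_from_raised:.2f}bn")
print(f"Incremental revenue: ${increment * 1000:.0f}m")
```

Both guides point to a 2024 base of roughly $7 billion, so the two growth rates and the $550 million increment are consistent with one another.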

Arista sits at the heart of the booming AI networking market.

Arista’s flagship Etherlink and EOS are key hallmarks of scale-out networking with a wide breadth and depth of network protocol support. Introduced in 2024, Arista’s Etherlink portfolio is now 20-plus products with the most comprehensive and complete solution in the industry, especially for scale-out back-end and scale-out front-end networking. It highlights our accelerated networking approach, bringing a single point of network control and visibility differentiation and improved GPU utilization. Poor networks and bottlenecks lead to idle cycles on GPUs, wasting both capital GPU costs and operational expenses such as power and cooling.

With a 30% to 50% processing time spent in exchanging data over networks and GPU, the economic impact of building an efficient GPU cluster with good networking improves utilization, and this is super paramount. Our stated goal of $750 million back-end AI networking is well on track and gaining from nearly 0 revenue 3 years ago in 2022 to production deployments this year in 2025. As a reminder to you all, the back-end AI is all incremental revenue and incremental market share to Arista. As large language models continue to expand into distributed training and inference use cases, we expect to see the back-end and the front-end converge and coalesce more together. This will make it increasingly difficult to parse the back-end and the front-end precisely in the future, but we do expect our aggregate AI networking revenue to be ahead of the $1.5 billion in 2025 and growing in many years to come. We will elaborate more on this in our Analyst Day in September, including our AI strategy and forecast.

What is crystal clear to us and our customers is that Arista continues to be the premier and preferred AI networking platform of choice for all flavors of AI accelerators. While the majority today is NVIDIA GPUs, we are entering early pilots connecting with alternate AI accelerators, including start-up XPUs, the AMD MI series and in AI and Titan customers [the 10 Titans] who are building their own XPUs.

As we continue to progress with our four top AI Titan customers, AI is also spreading its wings into the enterprise and Neocloud sectors, and we are winning approximately 25 to 30 customers to date. The rise in Agentic AI ensures any-to-any conversations with bidirectional bandwidth utilization. Such AI agents are pushing the envelope of LAN and WAN traffic patterns in the enterprise.

Jayshree Ullal, CEO, Arista Networks, Q2 2025, 5 August 2025

Share Recommendations

Broadcom AVGO

Arista Networks ANET

Strategy – 2 Shares to Buy

Arista Networks has the mix of 3G (great chart, great growth, great story) and that special magic that I look for. I am adding it to my Top 20 list, which becomes 25 shares (Broadcom is already in the list). I am also thinking about a new name for this elite group of shares.

Broadcom is the gift that keeps on giving, with its relentlessly outperforming shares.