Nvidia (NVDA) and Broadcom (AVGO) may be positioned for gains in 2026, Cantor Fitzgerald said Wednesday, following a period of recent weakness in semiconductor stocks.

Analyst C.J. Muse noted that AI-related stocks have faced pressure since early November amid broader market caution and concerns over an AI valuation bubble. Muse said these concerns might be overstated, given growing demand tied to AI infrastructure.

Nvidia shares are trading at roughly 16 times Cantor’s earnings estimates. The rollout of next-generation hardware (the Rubin GPU architecture and the custom Vera CPU, which together form the Vera Rubin platform) is expected to support massive-context AI workloads and data center growth.

Broadcom is valued at around 26 times Cantor’s earnings estimates. Muse highlighted the company’s networking business and its role in supporting Google’s (GOOG) tensor processing units, both of which could benefit further from AI-driven infrastructure spending.

The firm reiterated Nvidia and Broadcom as top picks for 2026, suggesting investors consider establishing positions ahead of anticipated AI-related growth, according to a Wednesday press release.

Gurufocus, 27 December 2025

Nvidia boasts a stunning chart, stunning fundamentals, an incredible story, and a CEO for the ages in Jensen Huang. Broadcom is pretty cool too; its shares have been temporarily depressed by what is likely to prove a short-lived disappointment with the latest results, which by any normal standard were amazing.

In our fiscal 2025, consolidated revenue grew 24% year over year, to a record $64 billion, driven by AI semiconductors and VMware. AI revenue grew 65% year over year to $20 billion, driving the semiconductor revenue for this company to a record $37 billion for the year. In our infrastructure software business, strong adoption of VMware Cloud Foundation, or VCF as we call it, drove revenue growth of 26% year on year to $27 billion. In summary, 2025 was another strong year for Broadcom.
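As a quick sanity check on the fiscal 2025 figures quoted above, the two segment totals should add up to consolidated revenue, and the stated growth rates let us back out rough prior-year values. Here is a minimal Python sketch using only the rounded numbers from the transcript, so the results are approximate; the "implied" prior-year figures are our own derivation, not numbers reported by Broadcom.

```python
# Rounded fiscal 2025 figures quoted on the call (US$ billions).
semiconductor_revenue = 37.0
software_revenue = 27.0
consolidated_revenue = 64.0
consolidated_growth = 0.24  # 24% year over year
ai_revenue = 20.0
ai_growth = 0.65            # 65% year over year


def implied_prior_year(current: float, growth: float) -> float:
    """Back out last year's value from this year's value and its growth rate."""
    return current / (1 + growth)


segment_total = semiconductor_revenue + software_revenue
prior_ai = implied_prior_year(ai_revenue, ai_growth)
prior_consolidated = implied_prior_year(consolidated_revenue, consolidated_growth)
ai_share_of_semis = ai_revenue / semiconductor_revenue

print(f"Segments sum to ${segment_total:.0f}B (reported ${consolidated_revenue:.0f}B)")
print(f"Implied fiscal 2024 AI revenue: ~${prior_ai:.1f}B")
print(f"Implied fiscal 2024 consolidated revenue: ~${prior_consolidated:.1f}B")
print(f"AI share of semiconductor revenue: ~{ai_share_of_semis:.0%}")
```

On these rounded inputs, AI accounts for a little over half of semiconductor revenue, and the implied prior-year AI figure is roughly $12 billion.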

And we see the spending momentum by our customers for AI continuing to accelerate in 2026. Now let’s move on to the results of our fourth quarter 2025. Total revenue was a record $18 billion, up 28% year on year and above our guidance on better-than-expected growth in AI semiconductors as well as infrastructure software. Q4 consolidated adjusted EBITDA was a record $12.12 billion, up 34% year on year. So let me give you more color on our two segments. In semiconductors, revenue was $11.1 billion as year on year growth accelerated to 35%. This robust growth was driven by AI semiconductor revenue of $6.5 billion, which was up 74% year on year.

This represents a growth trajectory exceeding 10 times over the eleven quarters we have reported this line of business. Our custom accelerator business more than doubled year over year as we see our customers increase adoption of XPUs, as we call those custom accelerators, in training their LLMs* and monetizing their platforms through inferencing APIs** and applications. These XPUs, I may add, are not only being used by our customers to train and run inference on internal workloads. In some situations, the same experience has been extended externally to other LLM peers. This is best exemplified at Google, where the TPUs*** used in creating Gemini are also being used for AI cloud computing by Apple, Cohere, and SSI, to name a few.

*LLMs, or large language models, are AI systems trained on massive text datasets to understand, generate, and process human language. They power tasks such as writing, summarizing, translating, coding, and chatbots, and essentially act as sophisticated text predictors that learn complex patterns to produce coherent, human-like responses. They are a core component of generative AI and typically use deep learning, most often Transformer architectures, to handle the nuances and context of language.

**An API, or application programming interface, is a set of rules or protocols that enables software applications to communicate with each other to exchange data, features and functionality.

***TPUs (Tensor Processing Units) are specialized processors designed by Google to accelerate machine learning (ML) and AI workloads. They efficiently handle the large matrix multiplications at the heart of deep learning tasks such as language models (Gemini), image recognition, and search, and can offer substantial performance gains over general-purpose CPUs and GPUs on those workloads. Developers can access them through Google Cloud to power scalable AI applications.

The scale at which we see this happening could be significant. As you are aware, last quarter, Q3 2025, we received a $10 billion order to sell the latest TPU Ironwood racks to Anthropic****. This was the fourth customer we mentioned. In this quarter, Q4, we received an additional $11 billion order from this same customer for delivery in late 2026. But that does not mean our other two customers are using TPUs. In fact, they prefer to control their own destiny by continuing to drive their multiyear journey to create their own custom AI accelerators, or XPU racks***** as we call them.

****Anthropic PBC is an American artificial intelligence (AI) company founded in 2021. It has developed a family of large language models (LLMs) named Claude. The company researches and develops AI to “study their safety properties at the technological frontier” and use this research to deploy safe models for the public.[6]

Anthropic was founded by former members of OpenAI, including siblings Daniela Amodei and Dario Amodei, who serve as president and CEO respectively.[7] In September 2023, Amazon announced an investment of up to $4 billion. Google committed $2 billion the next month.[8][9][10] As of November 2025, Anthropic is valued at over $350 billion.

*****“XPU” is a generic, catch-all term for specialized processing units (e.g., GPUs, TPUs, ASICs) designed to handle specific, compute-intensive workloads more efficiently than a general-purpose CPU, particularly in artificial intelligence (AI) and high-performance computing (HPC). There is no single, standardized definition across the industry.

I am pleased today to report that during this quarter, we acquired a fifth XPU customer through a $1 billion order placed for delivery in late 2026.

Now moving on to AI networking. Demand here has been even stronger as we see customers build out their data center infrastructure ahead of deploying AI accelerators. Our current order backlog for AI switches exceeds $10 billion as our latest 102 terabit per second Tomahawk 6 switch, the first and only one of its capability out there, continues to book at record rates. This is just a subset of what we have. We have also secured record orders for DSPs (digital signal processors), optical components such as lasers, and PCI Express****** switches to be deployed in AI data centers. All these components, combined with our XPUs, bring our total AI orders on hand to in excess of $73 billion today, which is almost half of Broadcom’s consolidated backlog of $162 billion. We expect this $73 billion in AI backlog to be delivered over the next eighteen months. In Q1 fiscal 2026, we expect our AI revenue to double year on year to $8.2 billion.
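The backlog figures lend themselves to a little arithmetic. The Python sketch below, using the rounded transcript numbers, checks the "almost half" claim and what an eighteen-month delivery window implies per quarter. The even delivery schedule is purely our simplifying assumption; Broadcom did not disclose how the $73 billion is phased, and because it is a floor ("in excess of"), the results are minimums.

```python
# Rounded figures from the Q4 fiscal 2025 call (US$ billions).
ai_backlog = 73.0             # "in excess of $73 billion"
consolidated_backlog = 162.0
delivery_months = 18
q1_fy26_ai_guidance = 8.2     # expected to "double year on year"

# Share of total backlog that is AI-related ("almost half").
ai_share = ai_backlog / consolidated_backlog

# ASSUMPTION (hypothetical): backlog ships evenly across the window.
quarters = delivery_months / 3
avg_ai_per_quarter = ai_backlog / quarters

# Doubling year on year implies roughly half the guidance a year earlier.
implied_q1_fy25_ai = q1_fy26_ai_guidance / 2

print(f"AI share of backlog: ~{ai_share:.0%}")
print(f"Average AI backlog shipped per quarter (even schedule): ~${avg_ai_per_quarter:.1f}B")
print(f"Implied Q1 fiscal 2025 AI revenue: ~${implied_q1_fy25_ai:.1f}B")
```

On that simple averaging, the backlog alone would support around $12 billion a quarter of AI shipments, comfortably above the $8.2 billion guided for Q1.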

******PCI Express (PCIe) switches are hardware components that expand PCIe connectivity. Acting like network switches for high-speed data, they let more devices (GPUs, SSDs, NICs) connect to a system than the motherboard’s direct slots allow, routing data efficiently over dedicated point-to-point links, which is crucial for performance in data centers, servers, and AI systems. Unlike older shared-bus systems, they create scalable, non-blocking pathways that let multiple devices communicate simultaneously without bandwidth bottlenecks.

Hock Tan, CEO, Broadcom, Q4 2025, 12 December 2025

Yeah, I know – that was disappointing!

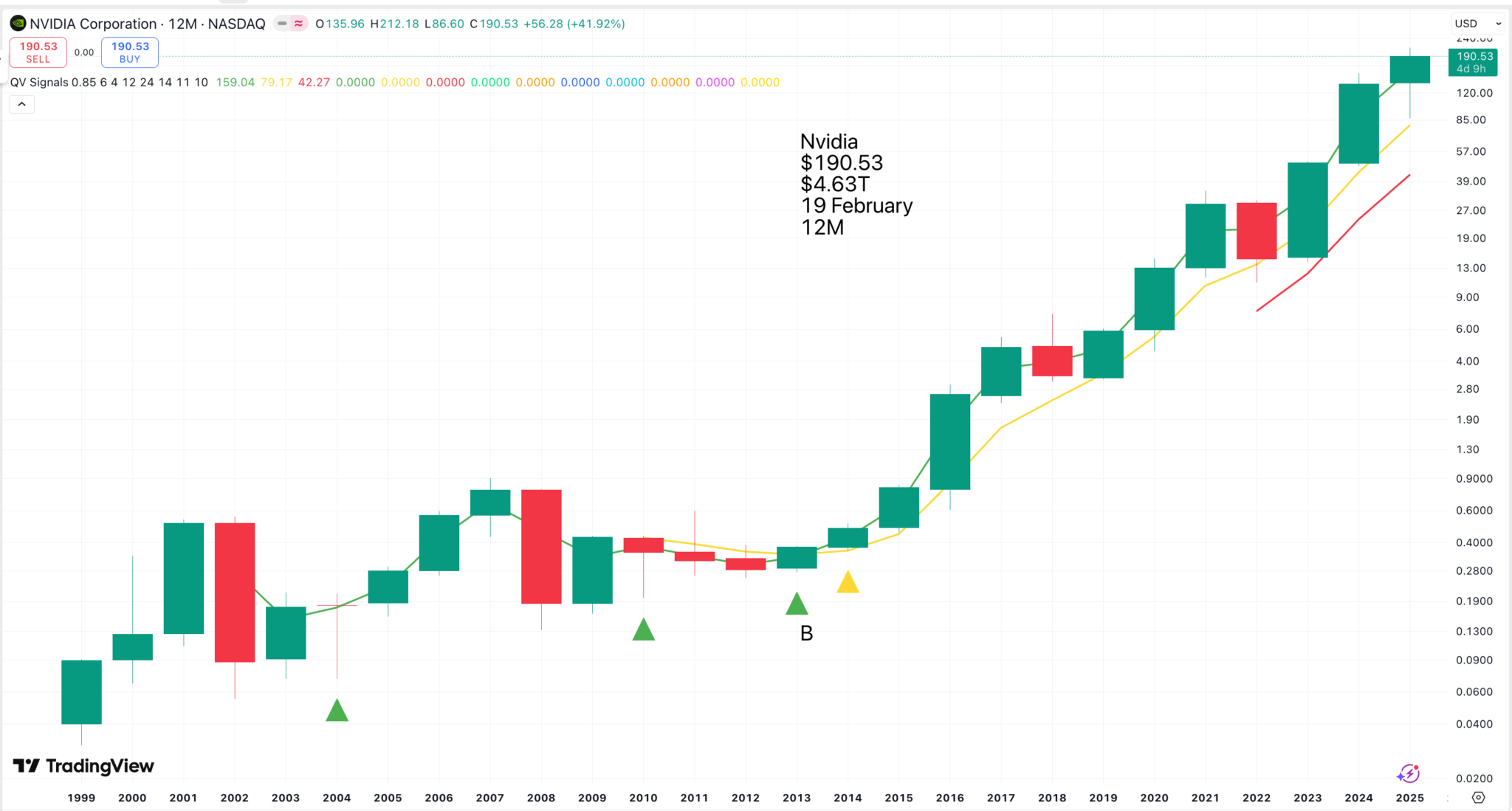

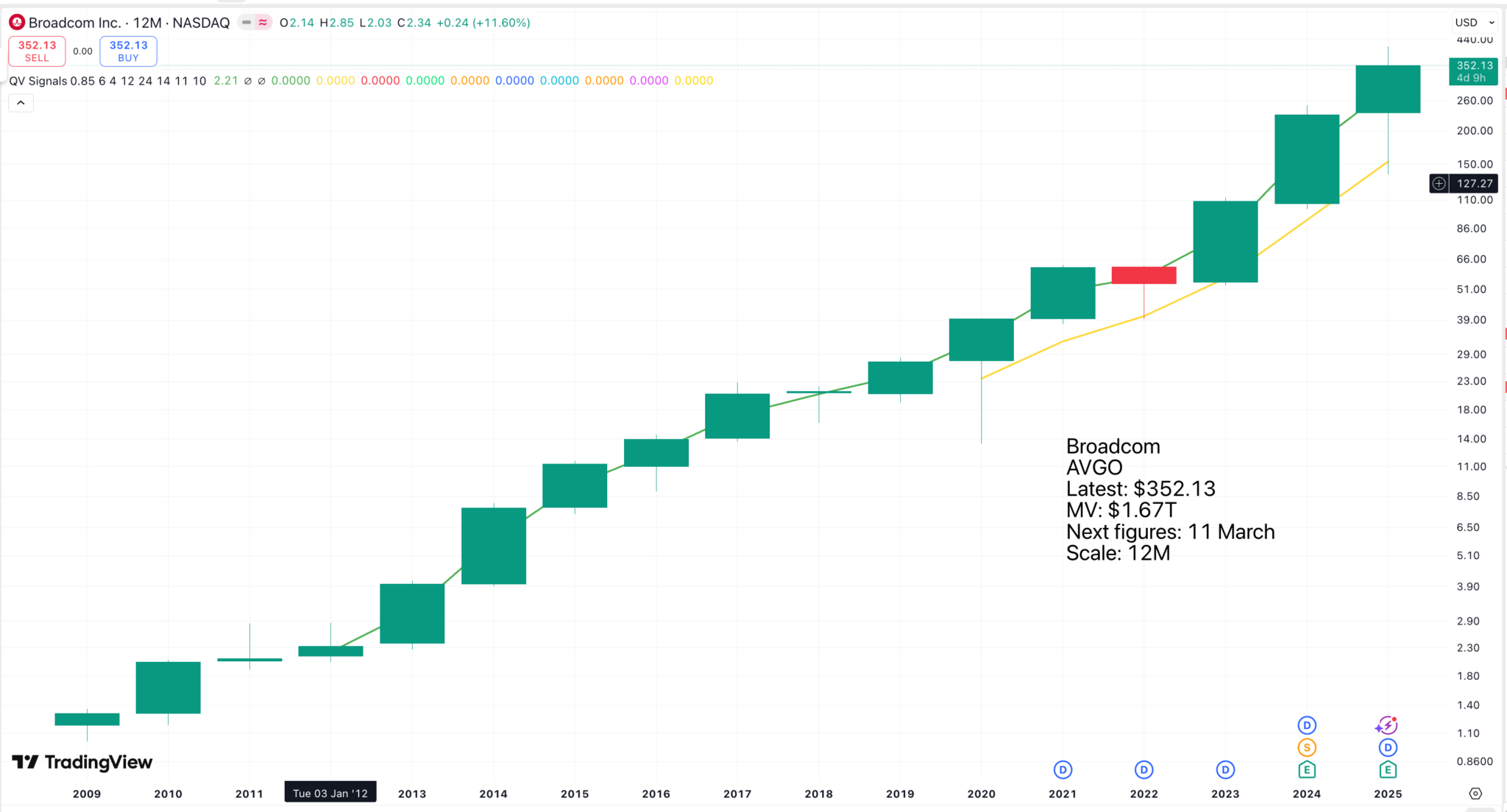

Note that for both of these shares, in recent times, down years have been rare and have invariably been followed by periods of strong growth.

Share Recommendations

Nvidia (NVDA)

Broadcom (AVGO)

Merry Christmas and a Happy and Prosperous New Year to all my subscribers. 2026 is going to be a brilliant year. Make sure to place your orders for those limited-edition Ferraris in good time. While you wait for delivery, eat, drink, take plenty of exercise, and be merry.